Following my previous successes in streaming audio to an Apple II, then video to an Apple II, I combined both to stream audio and video at the same time. Here is what it took to make it work.

The result

Generalities

The solution involves two serial ports, both running at 115200bps, giving me a bandwidth of 11.52kB/s per port. Server-side, sound is streamed to one port at 11.52kHz, and every 480 samples, the audio thread signals the video thread to push a frame to the other port, using a delta-based algorithm that only sends the bytes that changed since the last frame.
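The numbers above can be checked with a little arithmetic. This sketch assumes 8N1 framing (1 start bit, 8 data bits, 1 stop bit, so 10 bits on the wire per byte), which is what makes 115200bps come out at 11.52kB/s; the variable names are only illustrative.

```python
# Serial link budget, assuming 8N1 framing: 10 bits on the wire per byte.
BAUD = 115_200
BITS_PER_BYTE = 10
SAMPLES_PER_FRAME = 480

bytes_per_second = BAUD // BITS_PER_BYTE                      # 11520 bytes/s per port
audio_rate_hz = bytes_per_second                              # one audio byte per sample
frames_per_second = audio_rate_hz / SAMPLES_PER_FRAME         # 24.0 fps
video_bytes_per_frame = bytes_per_second / frames_per_second  # 480.0 bytes per frame
byte_period_us = 1e6 / bytes_per_second                       # time between two bytes

print(bytes_per_second, frames_per_second, video_bytes_per_frame, round(byte_period_us, 1))
# 11520 24.0 480.0 86.8
```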

Of course, the difference between a frame and the previous one varies in size, and while some frames fit in the time budget, others don’t: at 11.52kB/s and 24fps, I can only send 480 bytes per frame if I want zero dropped frames. A camera switch, for example, can require repainting the whole frame. In these cases, the video thread skips frames when it is late (that is, when the audio thread signalled it to push one or more frames while it was still busy pushing one).
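A minimal sketch of such a frame-skipping handshake between two server threads, in Python. The `FrameClock` class and its methods are hypothetical, not the actual proxy code: the audio thread calls `tick()` every 480 samples, and the video thread, whenever it has finished pushing a frame, calls `next_frame()`, which jumps straight to the most recent frame and reports how many were skipped.

```python
import threading


class FrameClock:
    """Audio thread ticks once per 480 samples; the video thread always
    pushes the most recent frame, skipping any it was too slow for."""

    def __init__(self) -> None:
        self._cond = threading.Condition()
        self._latest = 0   # last frame number requested by the audio thread
        self._pushed = 0   # last frame number the video thread started pushing

    def tick(self) -> None:
        # Audio thread: request a new frame.
        with self._cond:
            self._latest += 1
            self._cond.notify()

    def next_frame(self) -> tuple[int, int]:
        # Video thread: block until a frame is due, then return
        # (frame number to push, number of frames skipped).
        with self._cond:
            while self._latest == self._pushed:
                self._cond.wait()
            skipped = self._latest - self._pushed - 1
            self._pushed = self._latest
            return self._pushed, skipped


clock = FrameClock()
clock.tick()
print(clock.next_frame())   # (1, 0): on time
for _ in range(3):          # video thread busy during three ticks
    clock.tick()
print(clock.next_frame())   # (4, 2): frames 2 and 3 are skipped
```

Always jumping to the latest frame, rather than queueing, keeps video in sync with the audio clock at the cost of dropped frames.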

Client-side, we have to render the sound samples using PWM, which means the client’s busy loop has to complete in a fixed number of CPU cycles. I chose to fix that number at 68 cycles: at the Apple II’s roughly 1.02MHz clock, that is about 67µs, shorter than the 86µs separating two bytes on the serial port, so the loop is fast enough to play 11.52kHz sound. Of those cycles, 39 are allocated to the sound. As toggling the speaker takes 4 cycles, and we have to toggle it twice per sample, this allows for 32 distinct PWM levels to be represented:

- at level zero, we toggle (4 cycles), toggle (4 cycles), and wait (or do something else) for 31 cycles

- at level 15, we toggle (4 cycles), do something else (15), toggle (4), do something else (16)

- at level 31, we toggle (4 cycles), do something else (31), toggle (4).
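Those three cases generalize to any level: the gap between the two toggles is the level itself, and the rest of the 39-cycle budget comes after the second toggle. Here is a small Python model of that layout. The `pwm_schedule` helper is purely illustrative: the real handlers are 6502 code, and their "other" cycles are filled with useful work rather than idling.

```python
TOGGLE_CYCLES = 4    # one 4-cycle access to the speaker soft switch ($C030)
SOUND_BUDGET = 39    # cycles reserved for the sound part of the loop
LEVELS = SOUND_BUDGET - 2 * TOGGLE_CYCLES + 1   # 32 levels: 0..31


def pwm_schedule(level: int) -> list[tuple[str, int]]:
    """Return the (action, cycles) layout of the handler for `level`.
    'other' marks cycles available for other work between/after toggles."""
    if not 0 <= level < LEVELS:
        raise ValueError(f"level must be in 0..{LEVELS - 1}")
    tail = SOUND_BUDGET - 2 * TOGGLE_CYCLES - level
    steps = [("toggle", TOGGLE_CYCLES)]
    if level:
        steps.append(("other", level))
    steps.append(("toggle", TOGGLE_CYCLES))
    if tail:
        steps.append(("other", tail))
    return steps


for lvl in (0, 15, 31):
    print(lvl, pwm_schedule(lvl))
# 0 [('toggle', 4), ('toggle', 4), ('other', 31)]
# 15 [('toggle', 4), ('other', 15), ('toggle', 4), ('other', 16)]
# 31 [('toggle', 4), ('other', 31), ('toggle', 4)]
```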

These 32 level handlers are stored in memory one page apart from each other, so that at the end of a sample we can jump to $XX00, where XX is the byte received for the next sample: a single byte per audio sample is all we need. (This is for the 6502; with a 65C02, we can use jmp ($nnnn,x) and have multiple handlers per page.)
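As a sketch of the server side of this scheme, assuming the source audio is unsigned 8-bit PCM (an assumption, as is the simple `>> 3` quantization to 32 levels), each sample can be turned directly into the page of its handler, using the $6000-$7F00 placement discussed further down. The Apple II then only has to patch that byte into the high byte of its jmp operand. `sample_to_byte` and `handler_address` are hypothetical names.

```python
HANDLER_BASE_PAGE = 0x60   # assumed placement: handlers at $6000..$7F00


def sample_to_byte(pcm: int) -> int:
    """Quantize an unsigned 8-bit PCM sample to one of 32 levels and return
    the byte sent on the audio port: the page of that level's handler."""
    if not 0 <= pcm <= 255:
        raise ValueError("expected an unsigned 8-bit sample")
    level = pcm >> 3                   # 256 input values -> 32 levels
    return HANDLER_BASE_PAGE | level   # $60..$7F


def handler_address(serial_byte: int) -> int:
    """Client side: the received byte becomes the high byte of a jmp
    operand whose low byte is $00."""
    return serial_byte << 8


for pcm in (0, 128, 255):
    b = sample_to_byte(pcm)
    print(f"{pcm:3d} -> ${b:02X} -> jmp ${handler_address(b):04X}")
#   0 -> $60 -> jmp $6000
# 128 -> $70 -> jmp $7000
# 255 -> $7F -> jmp $7F00
```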

Once we’re done with the speaker, we jump to the video handler (3 cycles). The video handler then has 20 cycles to handle the video byte before jumping to the next sample handler. That jump costs 6 cycles this time: there would be too many different jumps to self-patch, so I use the jmp (indirect) instruction.
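Adding up the budget confirms that the loop fits between two serial bytes. This sketch assumes the Apple II's effective clock of roughly 1.0205MHz and 8N1 framing on the serial line:

```python
SOUND = 39         # PWM handler: two toggles plus idle/useful cycles
JMP_TO_VIDEO = 3   # jmp absolute to the video handler
VIDEO = 20         # video byte handling, whatever the code path
JMP_INDIRECT = 6   # jmp (indirect) to the next sample's handler

loop_cycles = SOUND + JMP_TO_VIDEO + VIDEO + JMP_INDIRECT
CPU_HZ = 1.0205e6                  # approximate effective Apple II clock
loop_us = loop_cycles / CPU_HZ * 1e6
byte_us = 1e6 / (115_200 / 10)     # time between two serial bytes (8N1)

print(loop_cycles, round(loop_us, 1), round(byte_us, 1))   # 68 66.6 86.8
```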

“Do something else” during the speaker PWM cycles covers the necessary work, like loading the next video byte from one port and the next audio byte from the other, plus the bells and whistles (keyboard handling, etc.).

The audio stream

Correctly checking whether a byte is available on the video serial port means looking at the relevant flag in the 6551 ACIA’s status register, and only then fetching the byte from the data register, which is expensive: 12 cycles minimum. For this reason, we don’t have enough cycles left to also check for the availability of a new audio byte, and hence take a shortcut: on every duty cycle, we blindly fetch whatever is in the audio serial port’s data register. “At worst, it’ll be the same byte as last time,” I thought.
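To see why blind reads looked safe at first, here is a small simulation under idealized assumptions: a ~1.0205MHz clock, bytes arriving back to back at exactly 10 bits each, and no torn reads. With a 68-cycle loop, every incoming audio byte is read at least once, and some are read twice, which only repeats a sample. `reads_per_byte` is a hypothetical helper.

```python
import math

CPU_HZ = 1.0205e6          # approximate effective Apple II clock
LOOP_S = 68 / CPU_HZ       # one pass of the playback loop
BYTE_S = 10 / 115_200      # one 8N1 byte on the serial line


def reads_per_byte(n_loops: int) -> dict[int, int]:
    """Read the data register once per loop, without checking the status
    register, and count how many times each incoming byte is seen."""
    seen: dict[int, int] = {}
    for i in range(n_loops):
        idx = math.floor(i * LOOP_S / BYTE_S)   # most recent complete byte
        seen[idx] = seen.get(idx, 0) + 1
    return seen


counts = reads_per_byte(10_000)
print(min(counts.values()), max(counts.values()))   # 1 2
```

Because the loop period is shorter than the byte period, no byte index is ever skipped; the trouble only starts when a read lands in the middle of an update.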

Wrong: at worst, we’ll be reading the data register at the precise moment when the ACIA updates it, and it does not update it atomically, but bit by bit (to be precise, according to the documentation, from the low bit to the high bit). This means we can load a corrupt (half-new, half-old) audio sample when (not if) we’re unlucky.
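A torn read can be modelled as the low bits coming from the new byte and the high bits still coming from the old one, following the low-bit-to-high-bit order mentioned above. This hypothetical `torn_read` helper lists every value a blind read could return while an old $64 is being replaced by a new $83:

```python
def torn_read(old: int, new: int, bits_written: int) -> int:
    """Value read while the ACIA has only replaced the low `bits_written`
    bits of `old` with those of `new` (low-bit-first update)."""
    mask = (1 << bits_written) - 1
    return (old & ~mask & 0xFF) | (new & mask)


def possible_reads(old: int, new: int) -> list[int]:
    """Every value a blind read can return during the old -> new update."""
    return [torn_read(old, new, k) for k in range(9)]


print([f"${v:02X}" for v in possible_reads(0x64, 0x83)])
# ['$64', '$65', '$67', '$63', '$63', '$63', '$43', '$03', '$83']
```

Several of those values ($63, $43, $03) lie outside the $64-$83 range entirely.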

This is cause for concern: as we use this byte as the high byte of the next duty cycle handler’s address, a wrong byte makes us jump to the wrong place, and if the byte isn’t in the expected range, we’ll jump outside of any duty cycle handler and crash.

Luckily, we can downgrade the severity of this bug to “innocuous annoyance”, like missing an audio byte, which only glitches one sample. We only need to make sure that reading a half-old, half-new byte will, in any case, land us in the correct interval.

I did this by placing my duty cycle handlers so that all valid values share the same constant high bits, and only the low five bits vary. Examples:

| Hexadecimal value | Binary value |
| --- | --- |
| $00 | 00000000 |
| $1F | 00011111 |
| $60 | 01100000 |
| $7F | 01111111 |
| $64 | 01100100 |
| $83 | 10000011 |

As you can see, the first three bits of $60 to $7F are always 011, and the remaining five cover the whole range from 00000 to 11111. The same goes for $00 to $1F, where the first three bits are always 000.

On the other hand, from $64 to $83, every bit, starting from the first one, can change. Reading a new $83 halfway written over an old $64, for example, after the ACIA has updated the four low bits, would give 01100011 ($63); after seven bits, 00000011 ($03). In both cases, we would then attempt to jump to $6300 or $0300, where there is no duty cycle handler, and crash miserably.

So, when using a direct jmp, we want our handlers at $6000, $6100, …, $7F00. When using jmp ($nnnn,x), we want X to be in $00 to $1F.
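This property can be checked exhaustively. The sketch below is deliberately stricter than a low-to-high model: it mixes two valid bytes with every possible bit mask, so the result holds whatever order the ACIA writes its bits in. `is_tear_safe` is a hypothetical helper name.

```python
from itertools import product


def is_tear_safe(values: range) -> bool:
    """True if mixing the bits of any two valid bytes, in any combination,
    always yields another valid byte (so a torn read still hits a handler)."""
    valid = set(values)
    for old, new, mask in product(values, values, range(256)):
        mixed = (old & ~mask) | (new & mask)   # some bits old, some bits new
        if mixed not in valid:
            return False
    return True


print(is_tear_safe(range(0x60, 0x80)))  # True:  top three bits always 011
print(is_tear_safe(range(0x00, 0x20)))  # True:  top three bits always 000
print(is_tear_safe(range(0x64, 0x84)))  # False: every bit can change
```

In other words, the valid set must be a 32-value block aligned on a multiple of 32: the constant high bits survive any mix, and the five low bits cover every combination anyway.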

The video stream

For the video stream, the algorithm is derived from my previous video-only streamer, with some optimisations stripped away so that handling always completes in 20 cycles (26 including the final jmp), whatever the code path.

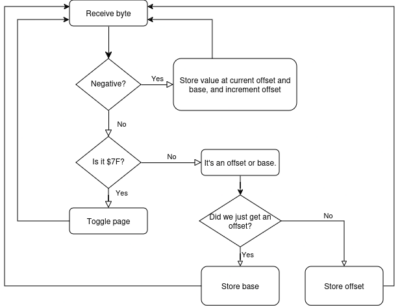

On the proxy side, our video is downscaled, then dithered to black and white using an ordered dithering algorithm to minimize inter-frame differences, and each frame is converted to its HGR representation. From there, all that remains is to identify the bytes that differ between the previous frame’s HGR representation and the current one, and send those differences to the Apple II using as few bytes as possible. This is basically delta encoding. We have three things to tell the Apple II:

- Where to store a video data byte

- The value of the data byte to store

- When to toggle the HGR page for double-buffering.
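A minimal sketch of the proxy-side pipeline up to the difference step, in Python. The 4x4 Bayer matrix is an assumption (the post only says "ordered dithering"), and downscaling is left out; the packing follows the standard HGR layout (7 pixels per byte, leftmost pixel in bit 0, interleaved rows). Because an ordered dither's threshold depends only on the pixel position, unchanged areas of the picture produce identical bytes from one frame to the next, which keeps the difference small. All function names are hypothetical.

```python
BAYER4 = [
    [0, 8, 2, 10],
    [12, 4, 14, 6],
    [3, 11, 1, 9],
    [15, 7, 13, 5],
]
WIDTH, HEIGHT = 280, 192
PAGE_SIZE = 0x2000


def dither(gray: list[list[int]]) -> list[list[int]]:
    """Ordered (Bayer 4x4) dithering of 0..255 gray levels to 0/1 pixels."""
    return [
        [1 if gray[y][x] > 16 * BAYER4[y % 4][x % 4] + 8 else 0
         for x in range(len(gray[y]))]
        for y in range(len(gray))
    ]


def hgr_row_offset(y: int) -> int:
    """Offset of screen row y from the start of an HGR page (interleaved)."""
    return (y % 8) * 0x400 + ((y // 8) % 8) * 0x80 + (y // 64) * 0x28


def to_hgr(pixels: list[list[int]]) -> bytearray:
    """Pack 280x192 monochrome pixels into an 8 KB HGR page image.
    Bit 0 is the leftmost pixel; bit 7 (palette) is left at 0."""
    page = bytearray(PAGE_SIZE)
    for y in range(HEIGHT):
        row = hgr_row_offset(y)
        for col in range(WIDTH // 7):
            byte = 0
            for bit in range(7):
                byte |= pixels[y][col * 7 + bit] << bit
            page[row + col] = byte
    return page


def changed_offsets(prev: bytes, cur: bytes) -> list[int]:
    """Offsets of the bytes that differ between two page images."""
    return [i for i, (a, b) in enumerate(zip(prev, cur)) if a != b]


black = to_hgr(dither([[0] * WIDTH for _ in range(HEIGHT)]))
gray = [[0] * WIDTH for _ in range(HEIGHT)]
gray[0][0:7] = [255] * 7             # light up the first 7 pixels of row 0
frame = to_hgr(dither(gray))
print(changed_offsets(black, frame))  # [0]
```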

For the “where”, I use the same scheme as previously: client and server both divide an HGR page into 65 “bases” of 126 bytes each. This allows representing any position in a whole HGR page using 2 bytes (a base number and an offset) with only 7 bits per byte, leaving the high bit unused.

As the high bit of an HGR byte is also unused in monochrome mode, the high bit of my video bytes will be used to distinguish whether a given byte is a “where” or a “what” byte. And, as 127 is not a valid value for an offset inside a base, 127 will be used to toggle the HGR page.
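The addressing scheme can be sketched as a naive encoder and a matching client-side decoder. This is an illustration under assumptions, not the real protocol: the stream is kept as symbolic `("base", n)`, `("offset", n)` and `("data", n)` tokens, leaving open which bit value marks a "where" byte and how a base byte is told apart from an offset byte, and the original's optimisations are left out. It only covers the first 65 × 126 = 8190 bytes of the page; the last two bytes fall in an HGR screen hole and are never displayed. `encode` and `decode` are hypothetical names.

```python
BASE_SIZE = 126               # bytes per "base"
N_BASES = 65                  # 65 * 126 = 8190 bytes
COVERED = N_BASES * BASE_SIZE
TOGGLE = 127                  # never a valid offset: means "flip the HGR page"


def encode(prev: bytes, cur: bytes) -> list[tuple[str, int]]:
    """Naive delta stream: a base is (re)selected only when it changes, then
    each changed byte is sent as an offset followed by its data."""
    ops: list[tuple[str, int]] = []
    current_base = None
    for addr in range(COVERED):
        if prev[addr] == cur[addr]:
            continue
        base, offset = divmod(addr, BASE_SIZE)   # both fit in 7 bits
        if base != current_base:
            ops.append(("base", base))
            current_base = base
        ops.append(("offset", offset))
        ops.append(("data", cur[addr] & 0x7F))   # high bit unused in monochrome
    ops.append(("offset", TOGGLE))               # frame complete: flip pages
    return ops


def decode(ops: list[tuple[str, int]], page: bytearray) -> bool:
    """Apply a token stream to a page image; return True if a flip was requested."""
    base = offset = 0
    flip = False
    for kind, value in ops:
        if kind == "base":
            base = value
        elif kind == "offset" and value == TOGGLE:
            flip = True
        elif kind == "offset":
            offset = value
        else:                                    # "data"
            page[base * BASE_SIZE + offset] = value
    return flip


prev = bytearray(0x2000)
cur = bytearray(prev)
cur[0], cur[130], cur[8000] = 0x7F, 0x01, 0x2A
ops = encode(prev, cur)
print(ops)
page = bytearray(prev)
print(decode(ops, page), page == cur)   # True True
```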

Here is the algorithm’s core. All of the code paths fit, quite tightly, in 20 cycles. The worst one is the base change, which requires one indirect-indexed load, one indexed load and two zero-page stores (15 cycles) after the various checks (5 cycles). This algorithm allows fixed-time access to any region of the Apple II memory, which comes in handy for video with subtitles (subtitles land in $400-$C00, while video lands in $2000-$6000).
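For reference, here is how that worst case adds up with standard NMOS 6502 cycle counts. The instruction list is inferred from the description above, not copied from the actual code, and it assumes that neither indexed load crosses a page boundary (each crossing would add a cycle and break the fixed budget):

```python
# Cycle counts for the instructions of the assumed base-change path.
CYCLES = {
    "lda (zp),y": 5,   # +1 if the indexed address crosses a page
    "lda abs,x": 4,    # +1 if the indexed address crosses a page
    "sta zp": 3,
}
checks = 5             # the various branches taken before reaching this path
base_change = ["lda (zp),y", "lda abs,x", "sta zp", "sta zp"]

work = sum(CYCLES[op] for op in base_change)
print(work, checks + work, checks + work + 6)   # 15 20 26 (with the final jmp (indirect))
```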

Note: if anyone has a better idea for representing changes across frames more compactly, requiring less bandwidth (but not more cycles: the budget is really tight, and we start losing bytes when adding 6 cycles to the loop), I’m very interested!

Bells and whistles

A few extra features exist: pause, rewind, forward, and subtitle toggling. These require reading the keyboard and, most of the time, sending the command to the proxy. Reading the keyboard and sending the command is done in the idle cycles of the level 15 handler; for subtitle toggling, the rest of the work is done in the idle cycles of level 16.