How to fix the missing featured image when a WordPress post is shared on Mastodon.

27/11/2022

How to host your own site: basic advice

How can you stop depending on multinationals to be on the internet? An overview of hosting for everyone.

18/11/2022

Automating ESP32 deployment with Ansible

ESPHome doesn't simplify ESP32 deployment quite enough for my taste. Let's deploy ESP32s with Ansible!

16/11/2022

Avoid getting locked out by 2FA

Two-factor authentication (2FA) is now all but indispensable. But take care not to end up locked out everywhere if/when your smartphone dies a violent death.

09/11/2022

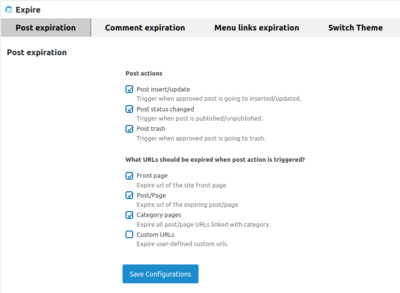

Speeding up WordPress, part 3: Varnish

Why recompute near-static pages when you can serve the result from a very fast cache, such as Varnish?

20/03/2022